One of the first literary elements that students understand as they begin to read is character. They learn that a character is a person, animal, being, or thing moving the story along a plot line. Many of the characters in books they can read independently have recognizable traits:

- The Pigeon in Don’t Let the Pigeon Drive the Bus is always stubborn and demanding.

- Horrible Harry of the Horrible Harry Series is always rebellious.

- Jack Smith of The Magic Tree House Series is always smart and courageous.

- Judy Moody of the Judy Moody Series is always determined and…well, moody.

The characters in series books at these elementary grade levels (K-3) are predictable. Students are able to practice reading because they are familiar with characters such as Ramona, Dog Man, Nate the Great, Captain Underpants, Amelia Bedelia, and Frog and Toad.

These characters’ thoughts and feelings may shift when they react to different problems or conflicts. But these characters do not change. They are static; they are not complex characters.

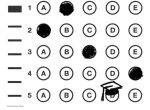

The dominance of static characters in the elementary grades can be an issue when students are faced with an assessment question:

“How did [the character] change? What caused this change?”

This particular question comes from the Teachers College Grade 3 Reading Units of Study, but other literacy programs also ask about character change.

It is important to understand that the word “change” is synonymous with a radical, transformative process. The etymology of the word change (c.1300) is “to undergo alteration, become different.” The Collins dictionary defines change as:

1. to put or take (a thing) in place of something else; substitute for, replace with, or transfer to another of a similar kind

2. to give and receive reciprocally; exchange; switch

3. a. to cause to become different; alter; transform; convert

The kind of change in character that matches this transformative meaning is difficult to find at the lower reading levels. A well-crafted character who “converts” or “alters” in a low-level text is unusual for any combination of reasons including choices in brevity, vocabulary, and text structure.

For most students who are reading at or below a Grade 3 reading level, there are few complex texts that they can read independently to determine a character change. Instead, the characters in the book series favored by students, such as Geronimo Stilton, The Boxcar Children, or Ivy and Bean, are intentionally crafted by authors so that the characters remain the same while the plot or the settings change.

There are exceptions, of course. The Grinch in Dr. Seuss’s classic story (not the film) How the Grinch Stole Christmas! undergoes a transformational character change, but at Lexile 731/level P, the book is most often used in the classroom as a read-aloud. There are few mentor texts like the Grinch that can give students the opportunity to practice for an assessment on character change.

The limited number of stories with complex characters at the lower grade levels means that students do not get enough independent practice with this concept. The leap from the predictable characters in a series (Babymouse, Henry and Mudge, Little House on the Prairie) to the kind of complex character change found in Maddie in Eleanor Estes’s The Hundred Dresses or in Jonas in Lois Lowry’s The Giver can be a high bar for many elementary or even intermediate readers.

So, why ask students at the lower elementary levels about character change at all? The phrase “character change” does not appear in the ELA Common Core State Standards (CCSS) until Grade 6 when students should:

“…describe how a particular story’s or drama’s plot unfolds in a series of episodes as well as how the characters respond or change as the plot moves toward a resolution.” (RL.6.3)

Instead, the CCSS states students in grades 3-5 should be able to:

- describe characters (traits, motivations, or feelings) and “explain how their actions contribute to the sequence of events” (RL.3.3)

- describe in depth a character, setting, or event in a story or drama, drawing on specific details in the text (e.g., a character’s thoughts, words, or actions) (RL.4.3)

- compare and contrast two or more characters, settings, or events in a story or drama, drawing on specific details in the text (RL.5.3)

Maybe the intent of asking students at the lower elementary levels about character change is to prepare students for the complex texts they will read in the upper grades. If this is the case, there should be some consideration of what resources could be used to support this kind of character study, especially as most books in elementary reading libraries feature characters that are purposefully crafted to be static. The characters are designed to be familiar to allow students to practice fluency and accuracy. Asking students to find evidence to show a character change when there is no change is an inauthentic exercise.

There is also a danger in assessing a student’s understanding of character change too soon in their literary lives. Teachers should also be careful not to elevate a shift in a character’s attitude to the level of a change in character. Guiding elementary students to answer “character change” questions by using evidence that shows how a character may think or feel differently can muddy a later understanding of what character change really means.

Students should not have to answer assessment questions that ask for evidence of character change where there is none. Instead, at the lower grade levels, students should be ready to answer assessment questions that draw attention to the differences in a character’s attitude, thoughts or feelings:

- “How did the character react to the problem?”

- “What caused the character’s reaction?”

- “What are the character’s thoughts and feelings now?”

- “Does this character have a different attitude?”

Most of the books in elementary school libraries can support these kinds of questions, which are closely aligned to the ELA CCSS for grades K-5. Finding the evidence that shows a difference in a character’s attitude, thoughts, or feelings is a task that elementary students can do in both mentor texts and in their leveled reading.

Identifying a character’s shift in attitude can also help students better understand the theme or message of the book, for example:

“Yes, this is where I want to be! The Circus is the place for me.” –Put Me in the Zoo

“Pete, you don’t need magic sunglasses to see things in a new way! Just remember to see the good in everyday!” –Pete the Cat and the Magic Sunglasses

“And for all I know he is sitting there still, under his favorite cork tree, smelling the flowers just quietly. He is very happy.” –The Story of Ferdinand

Of course, there are those iconic characters who have been designed to be so static that a question about a difference in attitude, thoughts or feelings is pointless. Just ask Max of Where the Wild Things Are. He is still in his wolf suit when he returns home “where he found his supper waiting for him and it was still hot.”